Former DeepMind Researchers Achieve SOTA in AI Reasoning with Poetiq Meta-System

Six former Google DeepMind researchers and engineers have developed a meta-system that optimizes large language models, achieving state-of-the-art (SOTA) performance on the ARC-AGI-2 leaderboard at less than half the cost of the previous best result. Their startup, Poetiq, focuses on enabling existing large models to autonomously generate strategies and model combinations for specific tasks.

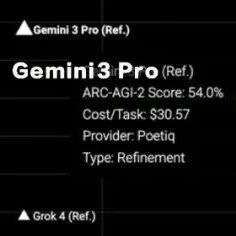

On December 8, the ARC Prize officially verified the results of Poetiq's system built on Gemini 3 Pro. The system scored 54% on the ARC-AGI-2 leaderboard at a cost of $31 per task, surpassing the previous best result of 45% at $77.16 per task.
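The two headline figures can be compared directly. The short sketch below uses only the numbers reported above; the dictionary keys and the `compare` helper are illustrative names, not part of any Poetiq or ARC Prize tooling.

```python
# Figures as reported in the article (accuracy as a fraction, cost in USD per task).
poetiq = {"accuracy": 0.54, "cost_per_task": 31.00}
previous_best = {"accuracy": 0.45, "cost_per_task": 77.16}


def compare(new, old):
    """Return (accuracy gain in percentage points, new cost as a fraction of old cost)."""
    gain_points = (new["accuracy"] - old["accuracy"]) * 100
    cost_ratio = new["cost_per_task"] / old["cost_per_task"]
    return round(gain_points, 1), round(cost_ratio, 3)


gain, ratio = compare(poetiq, previous_best)
print(f"Accuracy gain: {gain} percentage points")
print(f"Cost relative to previous best: {ratio:.1%}")
```

On these figures, the gain is 9 percentage points at roughly 40% of the earlier per-task cost.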

Poetiq's Meta-System Approach

Poetiq's founding team comprises six individuals from Google DeepMind, with a combined 53 years of professional experience. The company aims to "pave the fastest path to safe superintelligence with superior inference." On December 5, Poetiq announced that its system had "significantly surpassed existing methods and established new industry best performance."

The Poetiq system entered the official ARC Prize evaluation using a Gemini-only configuration. The team stated that the system established a new Pareto frontier on the ARC-AGI-2 public dataset, improving both performance and cost-effectiveness. Poetiq attributes this achievement to its meta-system, which builds intelligence on top of any model.

The meta-system leverages off-the-shelf, cutting-edge models to automatically create a complete system for specific tasks, without requiring the development or fine-tuning of new large models. This capability allowed Poetiq to integrate models like Gemini 3 and GPT-5.1 within hours of their release and achieve SOTA performance.

Performance and Cost Efficiency

The Poetiq meta-system has improved results on both ARC-AGI-1 and ARC-AGI-2, pushing the boundaries of low-cost inference. For example, Gemini 3 Deep Think (preview) cost more and scored lower than Poetiq's configurations. Those configurations, such as Gemini-3-a, b, and c, demonstrate the use of multiple large language models to maximize performance across various cost targets.

The system can programmatically handle ARC-AGI-1 and ARC-AGI-2 problems through multiple calls to Gemini-3, achieving Pareto optimality across a range of computational budgets. Poetiq (Grok-4-Fast), built on the Grok-4-Fast Reasoning model, prioritizes extreme cost efficiency, offering higher accuracy at a lower price than the original model.
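To make the term concrete: a configuration is Pareto-optimal if no other configuration is at least as cheap and at least as accurate while being strictly better on one of the two. The sketch below is a generic illustration of that definition, not Poetiq's code. The `Result` type and `pareto_frontier` function are assumed names, and the two entries marked hypothetical are placeholder values invented for the example; only the 54% at $31 and 45% at $77.16 figures come from the reported results.

```python
from typing import List, NamedTuple


class Result(NamedTuple):
    name: str
    cost: float      # USD per task
    accuracy: float  # fraction of tasks solved


def pareto_frontier(results: List[Result]) -> List[Result]:
    """Keep results that no cheaper-or-equal result matches or beats on accuracy."""
    frontier = []
    best_accuracy = float("-inf")
    # Sweep from cheapest to most expensive; on cost ties, try the most accurate first.
    for r in sorted(results, key=lambda r: (r.cost, -r.accuracy)):
        if r.accuracy > best_accuracy:
            frontier.append(r)
            best_accuracy = r.accuracy
    return frontier


results = [
    Result("Poetiq (Gemini 3 Pro), reported", 31.00, 0.54),
    Result("Previous best, reported", 77.16, 0.45),
    Result("hypothetical cheap config", 0.50, 0.10),      # placeholder value
    Result("hypothetical dominated config", 10.00, 0.05),  # placeholder value
]

frontier = pareto_frontier(results)
for r in frontier:
    print(f"{r.name}: ${r.cost:.2f} per task, {r.accuracy:.0%}")
```

In this toy data set, the previous best drops off the frontier because a cheaper entry is also more accurate, which is the sense in which a new result can move the frontier.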

Poetiq (GPT-OSS-b), based on the open-weight model GPT-OSS-120B, achieves notable accuracy at a cost of less than one cent per problem. Another configuration, Poetiq (GPT-OSS-a), runs GPT-OSS-120B at a low reasoning-effort setting to demonstrate system performance under extreme cost constraints.

A core advantage of this meta-system is its ability to automatically select model combinations and strategies, and to decide autonomously when and which model should write code. Poetiq's recursive, self-improving system operates independently of specific large models.

System Architecture and Future Directions

Poetiq researchers applied their meta-system to models from Google DeepMind, OpenAI, Anthropic, and xAI, consistently achieving a combination of higher accuracy and lower cost. The system uses large language models to build, improve, and run its operations.

Poetiq has open-sourced specific configurations to illustrate two key concepts:

Prompts as Interface: The system views prompts as an interface layer, not the intelligence itself. It engages in a cyclical problem-solving process where the large model generates potential answers, including code, which are then analyzed and refined through feedback.

Self-Checking: The system autonomously monitors its progress, deciding when it has enough information and the results are reliable enough to stop. This self-monitoring helps avoid spending compute on unnecessary model calls.
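The two concepts can be illustrated together with a minimal sketch of a generate, test, and refine loop that stops early. This is an assumption-laden toy, not Poetiq's open-sourced implementation: `refine`, `score`, and `make_stub_model` are hypothetical names, the "model" is a stub that returns canned candidate programs instead of calling an LLM, and the task is a simple grid-mirroring puzzle invented for the example.

```python
from typing import Callable, List, Tuple

Grid = List[List[int]]
Example = Tuple[Grid, Grid]
Program = Callable[[Grid], Grid]


def score(program: Program, examples: List[Example]) -> float:
    """Fraction of training examples the candidate program reproduces exactly."""
    passed = 0
    for grid, expected in examples:
        try:
            if program(grid) == expected:
                passed += 1
        except Exception:
            pass  # a crashing candidate simply fails that example
    return passed / len(examples)


def refine(propose: Callable[[str], Program], examples: List[Example], max_calls: int = 5):
    """Ask for candidates, feed back the results, and stop once one passes every example."""
    best, best_score, feedback, calls = None, -1.0, "", 0
    for calls in range(1, max_calls + 1):
        candidate = propose(feedback)  # the prompt/feedback string is only the interface
        s = score(candidate, examples)
        if s > best_score:
            best, best_score = candidate, s
        if s == 1.0:  # self-check: result looks reliable, so spend no more calls
            break
        feedback = f"attempt {calls} solved {s:.0%} of the examples"
    return best, best_score, calls


def make_stub_model() -> Callable[[str], Program]:
    """Stand-in for an LLM: hands out canned candidate programs in a fixed order."""
    candidates = iter([
        lambda g: g,                         # identity
        lambda g: [row[::-1] for row in g],  # mirror each row left to right
        lambda g: g[::-1],                   # flip top to bottom
    ])
    return lambda feedback: next(candidates)


EXAMPLES: List[Example] = [
    ([[1, 0], [2, 3]], [[0, 1], [3, 2]]),
    ([[4, 5, 6]], [[6, 5, 4]]),
]

program, best_score, calls_used = refine(make_stub_model(), EXAMPLES)
print(f"solved with score {best_score:.0%} after {calls_used} model calls")
```

Here the loop stops after the second call because the second candidate passes every training example, leaving the rest of the call budget unspent. A real system would replace the stub with an actual model call and pass the feedback string into the prompt.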

Poetiq selected ARC-AGI as a testing ground because it consists of complex reasoning tasks on which large models are often unstable, owing to their sensitivity to prompts and the randomness of knowledge extraction. The system does not hard-code a reasoning strategy; instead, it lets the model discover effective methods on its own within real-world constraints such as budget, tokens, or compute.

ARC-AGI's focus on abstract reasoning, induction, logic, and strategy generation plays to the system's ability to adapt quickly to the characteristics of each task and model. To keep the system evolving, Poetiq's team is extending it to more benchmarks that cover diverse reasoning and retrieval needs.

The Poetiq system is also designed to work alongside other systems, so it can optimize AI components inside larger existing frameworks. The approach suggests that the knowledge in frontier models can be applied to long-horizon tasks by adapting how that knowledge is extracted rather than by modifying the models themselves, which could reduce the need for fine-tuning.