Canvas-to-Image Unifies Multi-Control Image Generation on a Single Canvas

Canvas-to-Image, a new image generation framework, integrates diverse control methods such as identity, pose, and spatial layout onto a unified canvas. This approach allows users to generate high-fidelity, multi-controlled images through intuitive operations, simplifying the creative process by enabling complex compositions within a single interface.

While large diffusion models excel at generating high-quality images, they often encounter difficulties with complex composite scenes. Existing methods frequently suffer from fragmented controls, requiring separate handling for identity, pose, and spatial layout, which complicates coordination. Additionally, interactivity is often limited, with users primarily relying on text descriptions rather than intuitive controls.

Canvas-to-Image addresses these limitations by offering an interactive, controllable generation paradigm. Users can directly overlay multiple visual control prompts on a unified canvas. This includes placing character reference images to specify identity, drawing skeletons to constrain pose, and using bounding boxes to indicate the approximate spatial position of objects or elements. During inference, the model collectively interprets these heterogeneous cues, facilitating coordinated generation under composite control conditions while adhering to text descriptions.

The project and paper details are available at https://snap-research.github.io/canvas-to-image/ and https://arxiv.org/abs/2511.21691, respectively.

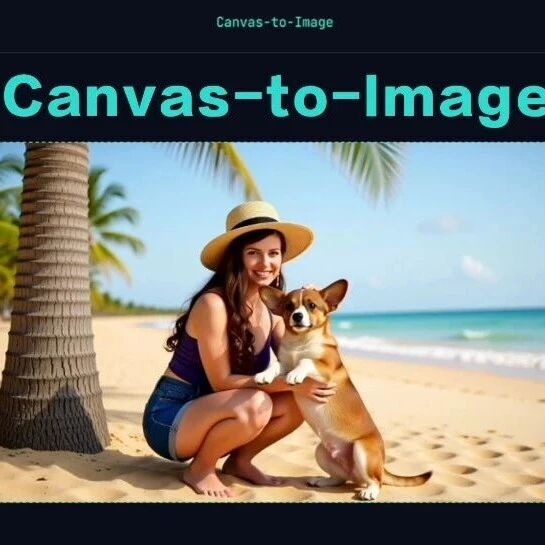

Users can position reference character images anywhere on the canvas, place specific pets nearby, and use bounding boxes to define the general location of elements like hats or palm trees. The model then combines text instructions, such as "a girl petting her dog by the sea," with multimodal canvas prompts to generate photorealistic images that align with semantic logic and visual composition.

The unified canvas enhances the editing process. Modifications, such as swapping a puppy for a cat figurine, changing a palm tree to a parasol, or adjusting a character's pose, only require local replacement or geometric adjustments on the canvas. Canvas-to-Image responds to these local changes without disrupting overall structural consistency, demonstrating efficient multi-control editability. The framework's goal is to integrate these diverse control signals into a single canvas interface, enabling intuitive creation of controllable, personalized content.

Core Technology and Design Philosophy

The core of Canvas-to-Image is a unified RGB canvas designed to integrate multiple heterogeneous control signals into a single representation. Control signals used during training include identity references (placing a person's reference image), pose skeletons (drawing a human skeleton), and bounding boxes (defining object positions). All this information is encoded within the same canvas image. The model utilizes a VLM-Diffusion architecture, based on Qwen-Image-Edit, to interpret and perform visual-spatial reasoning directly.

Single-Control Training, Multi-Control Inference

During the training phase, Canvas-to-Image simplifies the process by randomly adopting one control modality—spatial, pose, or bounding box—for each sample. This means the model does not encounter combinations of multiple controls during training.

However, during the inference phase, users can freely combine multiple control methods for complex multi-control generation. The model learns to understand "identity," "pose," and "position" separately during training but can naturally integrate all three during inference. This "emergent capability" allows the model to produce high-quality results even with previously unseen combined controls, simplifying training complexity while ensuring flexibility during inference.

Experimental Results

Canvas-to-Image demonstrates strong performance in multi-control, high-fidelity, and complex compositions. It can simultaneously manage identity, pose, and layout boxes, an area where baseline methods often fail. In complex multi-control scenarios, Canvas-to-Image accurately executes pose and position constraints, maintains stable character identity features, and generates images with clear structure and semantic consistency when multiple constraints are present.

The framework also supports combining specific characters with objects across various scenarios, maintaining better consistency for both characters and objects compared to baseline methods. With an input background image, Canvas-to-Image can naturally embed new subjects into the scene based on reference image pasting or bounding box annotations. Through the unified canvas expression, the model generates synthetic images with reasonable geometric relationships, matching lighting, and semantic consistency, significantly improving the quality of character or object integration into a scene.

Ablation Study Findings

Researchers systematically evaluated the model's performance as controls were incrementally added. With only identity control, the model generated characters but did not follow pose control or understand position boxes. Adding pose control enabled the model to manage identity and pose simultaneously, also improving its robustness with position boxes, even without prior training on them. This indicated a synergistic effect of multi-task learning. With spatial layout added, the model achieved full control over identity, pose, and position. A key finding was that despite single-task canvas training, the model naturally learned to combine multiple controls during inference, validating its design philosophy.

Canvas-to-Image advances composable generation from fragmented controls to a unified canvas, allowing users to complete all creative tasks within a single interface. This multi-modal control paradigm, centered on the "unified canvas," is poised to become a foundational element for future AI creative tools.