BAAI Introduces Emu3.5: A Multimodal World Model Predicting Future States

A shift in human-computer interaction is emerging as the Beijing Academy of Artificial Intelligence (BAAI) introduces Emu3.5, a new multimodal world model. This model is designed not merely as an image generator but as a system capable of understanding spatial relationships, inferring temporal changes, and predicting future events in the real world.

Context

Traditional image generation models often operate without a concept of time, action, or causality, limiting them to static scene creation. These models typically lack an understanding of physical laws and causal relationships, which are fundamental to human perception. For instance, humans instinctively understand that pushing a cup off a table will cause it to fall and potentially break, a form of world understanding that many current AI models cannot replicate. They struggle with spatial structure, temporal continuity, and causal links, hindering their ability to perform real-world tasks. Emu3.5 aims to bridge this gap, moving AI from content generation to world comprehension and task execution.

Key Points

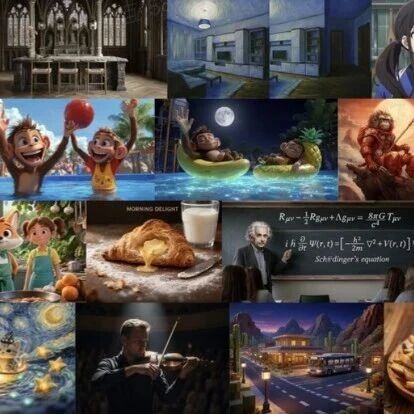

Emu3.5's capabilities extend beyond conventional text-to-image generation:

Interactive World Building: The model can generatively create interactive 3D environments, allowing for first-person exploration based on textual prompts. This includes generating realistic perspectives and environmental evolution, such as navigating a modern living room or exploring historical sites like the Temple of Heaven.

Embodied Intelligence (Visual Guidance): Emu3.5 can break down complex tasks into sequential steps, providing image-based explanations for each. This is demonstrated by its ability to generate instructions for tasks like folding clothes, ensuring logical sequencing and common-sense actions, which is a prerequisite for robotic understanding.

Instructional Visual Generation: The AI can understand and generate step-by-step instructional images, such as a visual guide for cooking a recipe, illustrating each stage of the process.

Under the Hood

Emu3.5 employs a unified Transformer model architecture to process data across all modalities. In contrast to approaches that utilize separate models for images, text, and video, Emu3.5 integrates these into a single system. Multimodal information—images, text, and video—is first converted into tokens. The model then learns relationships between these modalities by predicting the next token. The core task is unified as Next-State Prediction (NSP), which involves predicting the subsequent world state, encompassing both visual and linguistic elements.

From a structural standpoint, Emu3.5 treats all inputs—whether an image, a sentence, or a video frame—as "world building blocks." Its primary function is to predict the next block. If the next block is text, it completes the text; if it's a video frame, it completes the action; if it's a result, it infers world changes. This means predicting the next token is equivalent to predicting the "next second of the world," shifting the focus from "completing content" to "completing the world."

By comparison, previous AI models, such as GPT, learned by predicting the next word, primarily focusing on linguistic patterns. Emu3.5, however, predicts what the world will look like in the next second. For example, if presented with "The cat is running towards the mouse," it predicts the subsequent scene where the cat is closer to the mouse, and the mouse is fleeing. This method allows the model to learn causality and physics, understanding that actions have consequences and the world changes dynamically.

The model's training data includes approximately 790 years of video duration, providing a rich foundation for world cognition. Video, as a digital record of reality, simultaneously conveys information about time, space, physics, causality, and intent—the five essential elements of the world. This extensive video training enables Emu3.5 to learn real-world experience rather than just a collection of static images.

In practice, generation speed has been significantly improved through DiDA parallel prediction technology, increasing speed by approximately 20 times. This marks a notable achievement, as it is the first time an autoregressive model has attained speeds comparable to diffusion models.

Notable Details

While some advanced features like 3D world generation and embodied intelligence are not yet publicly available for testing, Emu3.5's multimodal understanding and state prediction capabilities have been evaluated. The model demonstrates an ability to simulate physical laws and causal relationships in image generation and editing. For instance, given an image of a child with a balloon and the prompt "The child's balloon accidentally flew away," Emu3.5 can generate the subsequent image depicting the balloon floating away. Similarly, it can predict the blooming of a flower or the appearance of a tree laden with fruit in autumn, reflecting developmental laws and causal relationships.

However, while models like GPT and Gemini can also predict outcomes based on common sense (e.g., a ball rolling off a table will fall), their predictions are primarily linguistic. Emu3.5's Next-State Prediction (NSP) goes further by generating the physical process of the ball falling, demonstrating an understanding of gravity, time, and laws of motion. This highlights the distinction between linguistic logical inference and physical dynamic prediction.

Tests also indicate that Emu3.5 performs well in generating anime effects and realistic product photography. However, there remains a gap in portrait and text rendering compared to mainstream image models. The model often requires complex and detailed prompts, suggesting further room for improvement in its image generation capabilities. Basic image editing functions, including precise editing, style transfer, 3D figurine creation, and image coloring, are commendable due to the model's understanding of the physical world, though they still lag behind top-tier image models.

Outlook

Emu3.5 represents a cutting-edge concept that addresses a fundamental question: Can AI truly understand the world? The model introduces several innovations, including:

Shifted Training Objective: Moving from predicting the next sentence to predicting the next second of the world, enabling AI to learn causality and physical logic beyond static results.

Video-Centric Training: Utilizing long videos as primary training material to capture time, action, and change, which are crucial carriers of real-world knowledge.

Unified Model Architecture: Processing text, images, and video within a single model to integrate reasoning, generation, and expression capabilities.

These directions focus on fundamental intelligence capabilities rather than short-term effects. However, challenges remain, including visual quality stability and occasional errors in temporal reasoning. The full impact of interactive exploration features is yet to be seen, as they are not yet accessible. While Emu3.5 is still in its early stages, it represents a promising exploration into developing AI that genuinely comprehends the world and can interact with it effectively.